The Public Lab Blog

stories from the Public Lab community


Frac Sand Mining: The community fight

This morning @Pat Popple, Public Lab Organizer from Chippewa County, WI, posted a presentation in her online publication, the Frac Sand Sentinel. The presentation was given by community member and landowner Johnne Smalley at the monthly meeting of Chippewa County’s Land Conservation and Forestry Department.

For those who haven’t been following the frac sand issue, the first permitting for frac sand mining in Wisconsin started in late 2006 (Pearson, 2015). As of December 2015, Wisconsin had 129 industrial sand mining facilities, ranging in size from 9 to 4,000 acres (Wisconsin DNR, 2015). Dozens of groups and thousands of people have participated in the fight against the frac sand mining industry's negative effects. Learn more about the groups involved and what they are doing on the Wisconsin page.

I’m posting Johnne Smalley’s speech to Chippewa County’s Land Conservation and Forestry Department as an example of the incredible amount of work, collaboration and passion it takes for individuals to take on environmental injustices in their community. In this speech, Johnne highlights that it’s not just the industry that’s the problem; it’s the policies that should be in place to protect our health and environment. It’s the responsibility of government to enforce the policies that exist. Finally, it's the money that drives the system to protect the economic interests of industry instead of people.

Hats off to Johnne for her speech, and to @Pat and all those who constantly support and share news about the fight they’ve been in for nearly 10 years:

"My name is Johnne Smalley. I own and pay taxes on land in Wheaton Township in Chippewa County. I am here today to find out what Chippewa County envisions for its future.

I have read Chippewa County’s Comprehensive Plan, but I don’t see the county following it. Page 173, Section 6.4 states:

Goal 1 - Maintain the physical condition, biodiversity, ecology, and environmental functions of the landscape, including its capacity for flood storage, groundwater recharge, water filtration, plant growth, ecological diversity, wildlife habitat, and carbon sequestration.

Goal 2 - Maintain the capacity of the land to support productive forests and agricultural working lands to sustain food, fiber, and renewable energy production.

How many acres of land have been removed from productive forests and agricultural working lands to support frac sand mines owned by and operated for the financial benefit of people that are not from our area, often not even from our state, and sometimes, not even from our country? How have all these frac sand mines maintained the physical condition, biodiversity, ecology, and environmental functions of the landscape, including its capacity for flood storage, groundwater recharge, water filtration, plant growth, ecological diversity, wildlife habitat, and carbon sequestration? What I’m seeing is a bunch of eyesores scarring our land, devastation of forested hillsides, businesses that were dependent on tourist trade closing, increased costs for agricultural businesses dependent on rail transport of fertilizers into the area and corn out of the area, decreased wildlife habitat resulting in increased crop destruction as the wildlife relocate into adjacent cropland, and tons of colloidal clay from their ponds washing into our trout streams and ruining the trout habitat. There are toxic levels of silica 2.5 dust in the air which affect our health and probably animal health. In other localities near frac sand facilities, veterinarians have noticed increased fertility problems including a significant lower conception rate and higher rate of stillborn and weak calves. There have been similar reports by farmers near mine sites in Chippewa County. Coincidence?

I’m also seeing a tremendous increase in the number of homes for sale around these sites and at greatly reduced prices. Some people have given up and just walked away from their home to move elsewhere. Now I am seeing the approval of another reclamation permit for a 1300+ acre frac sand mine, processing plant, and trans-load station. This permit has been granted to a company with a known history of disregarding DNR regulations that protect our groundwater from contamination.

I have also read a good bit of The Chippewa County Code of Ordinances. The Chippewa County Code of Ordinances Chapter 30, Sec. 106 lines 741-744 states: “Sec. 30-106. Permit denial. An application for a nonmetallic mining reclamation permit shall be denied if any of the factors specified in Wis. Admin. Code NR § 135.22 exist.” NR 135.22, Denial of application for reclamation permit, clearly states, “An application to issue a nonmetallic mining reclamation permit shall be denied if (c) 1. The applicant, or its agent, principal or predecessor has, during the course of nonmetallic mining in Wisconsin within 10 years of the permit application or modification request being considered shown a pattern of serious violations of this chapter or of federal, state or local environmental laws related to nonmetallic mining reclamation.” Northern Sands, LLC has more than 20 DNR violations of inappropriate exploratory borehole abandonments in Chippewa County. Leaving holes open can create a direct conduit for entry of contaminants to waters of the state and is a serious violation of ch. 281, Wisconsin Statutes and ch. NR812, Wis. Adm. Code. (Just ask anyone who has to drink water from an aquifer that has had liquid manure dumped down a hole into it).

The proposed post−mining land use given in 3.0 of the Howard Township Properties Nonmetallic Mine Reclamation Plan “include a combination of commercial and passive recreational uses....Approximately eighty-five percent of the site will be reclaimed as prairie grasslands: approximately fifteen percent of the area will be reclaimed as woodland.” The Chippewa County Land Conservation and Forest Management staff can explain better than I can that prairie grasslands are not the same as productive agricultural cropland that sustain food, fiber, and renewable energy production. (See goal 2 from Chippewa County’s Comprehensive Plan as quoted above.) NR 135 also states, “The proposed post−mining land use shall be consistent with local land use plans.” In addition, State law Sec.66.1001. Wis. Stats. requires that local land use-related decisions be consistent with the goals and objectives of that community’s comprehensive plan. I am not seeing how taking more and more productive cropland and forest away to return it to native prairie “maintains the capacity of the land to support productive forests and agricultural working lands to sustain food, fiber, and renewable energy production”.

I would also like to question why Chippewa County is not requiring an independent expert or consultant to do the monitoring and reporting of this mine site with reimbursement costs paid back to the county by Northern Sands. This permit allows Northern Sands to do their own checking and reporting. Their history has shown how well they have done that in the past. On multiple occasions, their actions and reports have been fabricated and falsely reported to both the Howard Town Board and the Wisconsin DNR. Having county personnel or even state personnel checking to make sure the monitoring and reporting is being done accurately is just adding to the taxpayers’ burden. With Northern Sands history, they will need close oversight and this cost should fall back onto Northern Sands—not the taxpayers.

An agency-designated consultant with recognized experience in the areas of financial assurance and reclamation should also be required to evaluate any financial assurance given by Northern Sands with the costs incurred paid by Northern Sands. Reclamation Surety Bonds for other mining endeavors have proved inadequate in the past. Repeatedly, the Surety Bonds have been for inadequate amounts. They may cover the cost of reclamation as outlined, but usually fail to cover any problems that may occur—especially the cost of re-working an area where reclamation failed and the cost of pollution clean-up. Also, there is a history of Surety Bond issuers failing when it comes time for the actual reclamation. In some instances there has been a close tie between the surety bond company and the mine owner.

In conclusion, I would like to repeat my question of how the Chippewa County envisions its future and how its actions in permitting these frac sand mines support this vision. Thank you."

Follow related tags:

wisconsin air-quality blog frac-sand

[reference] "Carbon Bombs" or "Climate Bombs" --EcoFys analysis for Greenpeace

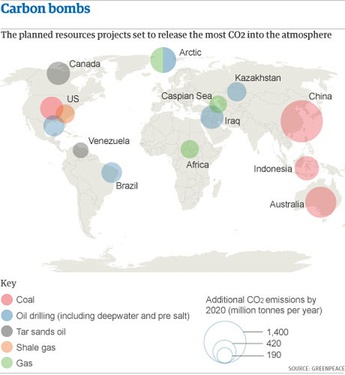

During the Open Hour on COP21, the term "Carbon Bomb" came up, along with an EcoFys report ("Point of No Return") on the 14 industrial projects judged most likely (as of 2013) to disrupt the climate by increasing industrial carbon emissions by 2020. In some cases, the carbon emissions potential for any one of the projects exceeds the carbon budget for the entire planet (565 MT by 2015, according to Carbon Tracker).

This report informs much climate activism, especially from Greenpeace. Commissioned by Greenpeace, it attempts to rank the most dangerous fossil-fuel projects currently being planned. The metric is simple: how many additional tons of CO2 the project will emit by 2020. (See the report for more on methodology.)

Report

link http://www.greenpeace.org/international/Global/international/publications/climate/2013/PointOfNoReturn.pdf

Voorhar and Myllyvirta, 2013. Point of No Return: The massive climate threats we must avoid. Greenpeace International, Amsterdam, The Netherlands, January 2013.

Written by: Ria Voorhar & Lauri Myllyvirta

Edited by: Brian Blomme, Steve Erwood, Xiaozi Liu, Nina Schulz, Stephanie Tunmore, James Turner

Published in January 2013 by Greenpeace International, Ottho Heldringstraat 5, 1066 AZ Amsterdam, The Netherlands

greenpeace.org

News Coverage

Qua the Gulf Coast --US's island nation, internally colonized

Louisiana needs "1.5 to survive" --the state is already experiencing population dislocation. Louisiana is also politically dominated by companies involved with several of the projects. The US is involved with 5 of the projects. The supply chains of these projects touch Louisiana and Texas.

GOM Offshore Directly. Here's how it ranks among a dirty dozen of climate wrecking projects:

1. China’s Western provinces / Coal mining expansion / 1,400

2. Australia / Coal export expansion / 760

3. Arctic / Drilling for oil and gas / 520 (dead 2015?)

4. Indonesia / Coal export expansion / 460

5. United States / Coal export expansion / 420 (limited in 2015)

6. Canada / Tar sands oil / 420
   (Oil trains through wetlands in Pass Manchac, Valero Refinery in NORCO, waste processing at Shell / IMTT, waste Pet Coke goes to IMT and UBT)

7. Iraq / Oil drilling / 420

8. Gulf of Mexico / Deepwater oil drilling / 350

9. Brazil / Deepwater oil drilling (pre-salt) / 330

10. Kazakhstan / Oil drilling / 290

11. United States / Shale gas / 280

12. Africa / Gas drilling / 260

13. Caspian Sea / Gas drilling / 240

14. Venezuela / Tar sands oil / 190 (see other tar sands)
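For anyone who wants to tally the list, here is a minimal Python sketch. It assumes only the figures as they appear in the ranking above; the post does not state their unit, so the script leaves it unstated too. The names `PROJECTS`, `total`, and `subtotal` are made up for this illustration, and the choice of "Gulf Coast" entries (the two United States items plus the Gulf of Mexico) is my own grouping, not one taken from the report.

```python
# Figures copied from the ranked list above: (region, project, value as listed).
# The unit is not stated in the post, so none is assumed here.
PROJECTS = [
    ("China's Western provinces", "Coal mining expansion", 1400),
    ("Australia", "Coal export expansion", 760),
    ("Arctic", "Drilling for oil and gas", 520),
    ("Indonesia", "Coal export expansion", 460),
    ("United States", "Coal export expansion", 420),
    ("Canada", "Tar sands oil", 420),
    ("Iraq", "Oil drilling", 420),
    ("Gulf of Mexico", "Deepwater oil drilling", 350),
    ("Brazil", "Deepwater oil drilling (pre-salt)", 330),
    ("Kazakhstan", "Oil drilling", 290),
    ("United States", "Shale gas", 280),
    ("Africa", "Gas drilling", 260),
    ("Caspian Sea", "Gas drilling", 240),
    ("Venezuela", "Tar sands oil", 190),
]


def total(projects):
    """Sum the listed value over all projects."""
    return sum(value for _, _, value in projects)


def subtotal(projects, regions):
    """Sum the listed value over projects whose region is in `regions`."""
    return sum(value for region, _, value in projects if region in regions)


if __name__ == "__main__":
    # Hypothetical grouping for illustration: the entries most directly
    # tied to the US and the Gulf of Mexico.
    gulf_regions = {"United States", "Gulf of Mexico"}
    all_projects = total(PROJECTS)
    gulf = subtotal(PROJECTS, gulf_regions)
    print(f"All 14 projects: {all_projects}")
    print(f"US + Gulf of Mexico entries: {gulf}")
    print(f"Share of the total: {gulf / all_projects:.1%}")
```

Changing `gulf_regions` lets you test other groupings, for example adding "Canada" to account for the tar sands supply chain noted under item 6.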

Follow related tags:

gulf-coast research blog climate-change

Ground Truth in the Negev-Naqab Desert, Israel

https://mapknitter.org/embed/el-naqab

A three-day event in the south of Israel, in the unrecognized Bedouin villages that are frequently demolished by the Israeli authorities. Al Araqib, where the event took place, is a central node of the Bedouin struggle: after 92 demolitions in the past 6 years, its residents are staying put. Aerial photographs are one of the most important tools Al Araqib's residents have to prove their presence on this land for more than a hundred years. We worked with them to create high-resolution aerial photographs, as a process to document the village as it is today, detect the changes that occurred with each demolition, and reconstruct evidence of violence and destruction inscribed onto the land. The aerial photograph above is the first stitch, to be continued, of images taken under far from ideal weather conditions on the last day of 2015 and the first of 2016 (December 31 to January 1). The village's cemetery can be seen in the stitch; it holds the only structures that were never demolished. More details below.

A 3D image (sparse point cloud) created by Ariel Caine that shows the kite's movements above the al-Turi cemetery:

Ground Truth: Testimonies of Dispossession, Destruction and Return in the Naqeb/Negev Al-Araqib, January 1-2, 2016

Exhibition | Launching: The Conflict Shoreline (Babel Publication) | Launching: Zochrot’s Truth Commission Report | Aerial Photography Workshop | Oral Testimonies

Zochrot, the inhabitants of Al-Araqib, and the Forensic Architecture project at Goldsmiths, University of London organized a gathering at the village of Al-‛Araqib in the Naqeb/Negev that will include the final session of the Truth Commission on the Events of 1948-1960 in the South. The events will be held in two tents built especially onsite. Given the recent and ongoing rejection of the Bedouins’ legal claims and the recurring brutal destruction of “unrecognized villages” such as Al-Araqib, we will examine the conditions for the production of truth in a neocolonial age through oral testimonies and memories, aerial photography, studies by Negev researchers and historical maps to offer an alternative conceptual horizon on civil society’s ability to re-appropriate its truth.

Curation and project management: Debby Farber

Together with: Eyal Weizman

Tent planning: The inhabitants of Al-Araqib together with Sharon Rotbard

Aerial photography workshop and exhibition: PublicLab:Jerusalem, Hagit Keysar, Ariel Caine

Archival Documentation: Miki Krazman

The project is a collaboration with Sheikh Siah Al-Turi, Aziz Al-Turi, Salim Al-Araqib, Ahmad Khalil Abu-Madighem, Awad Abu-Farih, Nuri Al-Uqbi and all the inhabitants of Al-Araqib.

Follow related tags:

mapknitter israel blog barnstar:photo-documentation

Thank you for another wonderful year of Public Lab!

Last week marked my three-year anniversary as Public Lab staff, though I’ve been working with Public Lab since the mapping of the BP disaster. It’s amazing to think how far we’ve come as a community and an organization since our scrappy band of aerial mappers spread across the Gulf Coast in the wake of the oil spill, mapping hundreds of miles of spill with just a few shared kite and balloon kits.

Looking back on 2015 with my usual December nostalgia, I’m so proud of the work we’ve accomplished this year. We wrapped up a great project in partnership with the EPA Urban Waters program and our Gulf Coast community to map wetland restoration projects around New Orleans, marking the end of our first Federal grant partnership - a project that wouldn’t have been possible without Stevie and our Gulf Coast crew. We also celebrated Public Lab’s 5th anniversary by sharing funny, inspiring and heartening memories from the community. And we hosted another round of Barnraisings, this year in Chicago and New Orleans, where we welcomed new partners to the community, met online collaborators in person for the first time, launched The Barnraiser daily news, and ate an unimaginable amount of tacos.

This is all to say THANK YOU to the entire Public Lab community for another great year of collaboration, creative thinking, and camaraderie. What was your favorite Public Lab moment in 2015 and what do you aspire to in 2016?

Follow related tags:

blog

Simple Aerial Photomapping at EWB West Coast Regional Conference, Cal Poly, 2015

What I want to do

Continue to make potential users like Engineers Without Borders teams aware of the Public Lab DIY mapping tools. For those who need up to date, high-resolution imagery, this technique is so accessible that it should not be overlooked.

My attempt and results

I ran a workshop at the Engineers Without Borders Regional Conference at Cal Poly on 11/14/15. I've done this for the last six years, at the regional conferences on the Peninsula at YouTube's HQ, in Portland, at Cal Poly, in San Diego, and at Davis. I also presented a poster at the EWB-USA International Conference.

Again, the 60-minute session went by in a flash. Get ready ahead of time: have the balloons or kites ready, be ready to attach the rig, and be ready to go. Again, it paid off. We did have trouble: the small balloon configuration with a pair of small Mobius cameras was pushed down horizontally and across the nearby building. We were still able to gain altitude and reel in our balloons without incident, but as a result the imagery was taken from a relatively low altitude.

Ground based photos are here:

There was interest in the autostitching tools as a way to speed up the processing. I also noted that Autodesk's free 123D Catch app for Windows PC, iOS, and Android tablets has replaced the purely web-based version, and that it can process up to 70 images to produce a 3D model.

In the interest of time, I used Photoshop's Photomerge feature to autostitch a set of photos and place the composite image using Mapknitter.

https://mapknitter.org/embed/2015-ewb-regional-at-cal-poly

Questions and next steps

Continue to explore where Mobius cameras with non-fisheye lenses and small kites or balloons fit into the tool set and make sense to use. It is worth noting that the Mobius camera's rolling shutter and the inherent movement of the camera result in numerous images where linear features are warped or distorted and not optimal, or even useful, for mapping. However, a flight typically yields a sufficient number of relatively undistorted images that are useful.

Why I'm interested

Kite and balloon aerial photomapping is a useful technique for those who need up to date, high-resolution imagery. This technique is so accessible that it should not be overlooked.

Follow related tags:

balloon-mapping mylar bap blog

Cross-posting: The Tapajós River communities begin to monitor water quality with sensors

This is a cross-post from InfoAmazonia's blog page, Google-translated from Portuguese. Check out the original post with pictures here. The above photo came from InfoAmazonia.

Neat project, we were lucky to hear more about it at this year's Barnraising from @vjpixel.

Blog post by: Giovanny Vera 11/24/2015 17:20

Did you know that 73% of Brazil's fresh water is in the Amazon, and yet the share of treated water in this region is about 22% lower than in the rest of Brazil?

With this in mind, the InfoAmazonia Network project developed, with support from the Google Social Impact Challenge, a low-cost monitoring system that analyzes the quality of water for human consumption in the Amazon. We are also creating a coordinated monitoring network with communities. The water data collected by the Mother of the Water sensor will be shown in real time on the InfoAmazonia site, and alerts will be sent to consumers via SMS.

"What we do is monitor untreated water that people gather from different sources, such as wells, ponds, or even directly from the river," said VJ pixel, coordinator of the InfoAmazonia Network. "We consider that our project is precisely to give relevant information to people about the water they are consuming," he adds.

During the month of October, the InfoAmazonia Network team was in the Lower Tapajós region, in Pará, to conduct training workshops on installing and maintaining the sensors in Santarém, Belterra, and Mojuí dos Campos. In total, sensors were installed at 18 points in these municipalities, in communities, villages, and cities.

The mission began in Santarém on 13 October, with events on 14, 15, and 16 October. On the first two days, electronics workshops were held in partnership with the Health & Joy Project and the Federal University of Western Pará (UFOPA): one on basic electronics and the other on advanced electronics. On the last day, the InfoAmazonia Network's water quality sensor project was presented, along with a demonstration of the Mother of the Water sensor in operation. During this workshop, the participants chose the locations to be monitored. Once the points were defined, the volunteers were responsible for contacting the people in charge of each site and ensuring a minimum structure (access to the site, stairs, and electricity) for installation together with the project team.

After the points to be monitored were defined, three teams were created to install the sensors. One remained on the right bank of the Tapajós River to carry out installations in the urban area of Santarém, Belterra, the Tapajós National Forest, and Mojuí dos Campos. The other teams crossed to the left bank to carry out installations in communities and villages in the Tapajós-Arapiuns Extractive Reserve (Resex).

The first installation was made on Saturday the 17th, at the headquarters of the Health & Joy Project, and other installations soon followed. In the Resex, seven points were installed, one of them in an indigenous village. Each installation took between 1.5 and 3 hours, with the direct participation of the inhabitants at each monitoring point.

The main technical difficulty was that "we had hoped that the water tanks would stay full most of the time, and found that they empty very frequently," said Pixel. The solution was to place a small container holding the sensors inside the water tank, so that if the tank runs dry, the sensors remain submerged. If the sensors stay out of water for a long time, they stop working.

The participation of volunteers and officials was one of the most valuable contributions of the communities. Local leaders and residents themselves understand the need to know the quality of the water they use and drink in their homes. "Communities set the direction of the project. The project was created so that communities have an information network that helps them better manage alternative systems and demand from the competent bodies a guarantee of water supply quality," said Gina Leite, of the InfoAmazonia Network.

The communities installed the equipment themselves; the InfoAmazonia Network only guided the installation. So the first stage of training was more theoretical, and the second part was practical training, explained Pixel. It was done that way because people need to know how to handle the equipment, both to move it to another location and to maintain it. "We need to empower people in handling the equipment and also in the project methodology," says Pixel.

"The project, besides being innovative, brings up the issue of transparency regarding information on water quality. Having control of water quality in real time and accessible via the Internet is an extraordinary contribution to quality of life," said Paulo Lima, of the Health & Joy Project.

The product is currently in the phase of validating the information it collects, a very important step in establishing the reliability of the data, says Gina Leite. Then the site will be launched to give visibility to local conditions. "We hope that the initiative sparks more interest among communities, schools, universities, and the media in the importance of water for health," Leite says.

"It is important that UFOPA itself, academics, and other organizations in the region take ownership of the project, so that its continuity does not depend on us and it becomes independent," acknowledges Pixel. Paulo Lima, of the Health & Joy Project, shares this view; he believes that the project should be replicated in a greater number of communities and continue its technological development to expand its capacity to analyze water.

The InfoAmazonia Network still has a long way to go, with knowledge to pass on and experience to gain. For now, the work continues: perfecting the system and disseminating information on water quality in the Amazon, bringing transparent information to communities so that their rights are respected and their leaders fight for health and for the Amazon.

Follow related tags:

blog